How I ended up being one of the first people to take the genetically modified mouth bacteria

I swished the vial of bacteria around in my mouth for two minutes and then spit it into the kitchen sink.

“You’d better eat something with sugar now to help the bacteria colonize your mouth,” Aaron suggested.

“How about this leftover cake from the BBQ yesterday?”

“That works.”

While I was completing the cake-consumption part of the procedure, Aaron informed me that I was maybe the ~25th person in the world to get this genetically modified anti-cavity bacteria.

I first met Aaron, the founder of Lumina Probiotic, a few days earlier in the Miami airport. I was waiting to board the plane that would take me to Roatán, Honduras, for the first time. Aaron and I, along with a group of other attendees, were headed to Próspera for a conference about DeSci & Longevity. It was November 2023.

It was a small conference, and we all got to know each other. We spent several days learning and discussing unconventional advancements in health research and the research process itself. As a non-institutional scientist, I had come for the DeSci discussions, but I also enjoyed learning about longevity science, biotech innovations, and the challenges with FDA approval.

On the second-to-last day, Aaron, who was coincidentally staying in the same rental house as me, offered me a vial of the stuff. I thought about it for a day and decided to go for it.

Why did I do it?

I’ve done my best to honestly reflect on why I determined this was a fine decision for me.

Firstly, I believed the treatment to be reasonably safe.

A few other people had already done it and been fine; I am probably like them, so I will probably also be fine. (Argument from analogy)

After reading some of their documentation and literature review, I found their hypotheses about the treatment (and why it had previously failed to launch) to be reasonable. (Abductive reasoning)

I spoke extensively to the manufacturer and they seemed capable and knowledgeable to me. As a non-expert, I rely on the heuristic of trusting sources who have demonstrated expertise. (Appeal to ethos)

My reasoning about safety may be flawed (e.g., it may rest on invalid assumptions, and as a non-expert I find it difficult to assess credibility).

That aside, the decision also depends on my individual situation and preferences. I’d recently had some annoying cavities treated, I felt that I had only a limited window for this unique opportunity, I’m generally healthy, and I have a risk-tolerant personality.

How different is this loose-seeming distribution method from FDA clinical trials?

As you gather information in the process of releasing a new treatment, you continually balance exploiting the existing evidence against the need for further exploration and validation. You need more data to know whether it’s safe; you want more assurance of safety to know whether you should keep collecting data.

In my former career as a data scientist, I was a big fan of the multi-armed bandit problem, and particularly a solution to it called Thompson sampling.

The basic idea of the problem is that you’re in a casino, and each slot machine has a different chance of payout, but you don’t know which one is the best. It’s the classic exploit-explore tradeoff. Do you keep playing the machine that's currently giving you the best results (exploitation), or do you try out other machines to see if they have better payouts (exploration)?

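The beautiful Thompson sampling algorithm balances exploitation and exploration by keeping a probability distribution over each machine’s payout rate, built from that machine’s past results. Each round, it draws one plausible payout rate from every machine’s distribution and plays the machine with the highest draw. This way, you are more likely to select the machine that currently looks best, but it’s not guaranteed, and the odds of choosing it rise as you gather more evidence that it really is better.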
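To make the idea concrete, here is a minimal sketch of Thompson sampling in Python. It assumes each machine pays out with some fixed but unknown probability (a Bernoulli payout) and starts every machine from a uniform Beta(1, 1) prior. The function name and the payout rates in the usage line are made up for illustration.

```python
import random


def thompson_sampling(true_rates, n_rounds, seed=0):
    """Beta-Bernoulli Thompson sampling; returns how often each machine was played.

    true_rates are the hidden payout probabilities (hypothetical values);
    the algorithm only ever sees the wins and losses it observes.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    wins = [0] * k    # observed payouts per machine
    losses = [0] * k  # observed non-payouts per machine
    pulls = [0] * k
    for _ in range(n_rounds):
        # Draw one plausible payout rate per machine from its Beta(1 + wins, 1 + losses) posterior
        samples = [rng.betavariate(1 + wins[i], 1 + losses[i]) for i in range(k)]
        # Play the machine whose sampled rate is highest
        arm = max(range(k), key=lambda i: samples[i])
        pulls[arm] += 1
        # Simulate the payout and update that machine's evidence
        if rng.random() < true_rates[arm]:
            wins[arm] += 1
        else:
            losses[arm] += 1
    return pulls


# Three hypothetical machines; the third is secretly the best
pulls = thompson_sampling([0.1, 0.3, 0.6], n_rounds=2000)
print(pulls)
```

Early on, the draws are noisy, so every machine gets tried; as evidence accumulates, the best machine’s distribution narrows and it wins almost every draw.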

Clinical trials don’t generally work like this. The decisions are more binary – is it good enough to move to the next phase or not? Phase 1 judges safety in a small group (20-80 people), then Phase 2 judges efficacy in a larger group (100-300 people), and Phase 3 tests it on a larger and more diverse population before it is finally released to the public. It’s a step function rather than a continuous release.

Each of these phases requires volunteers. To increase participant safety, the FDA enforces informed consent, preclinical testing, and other risk mitigation measures. But none of these precautions changes the fact that someone has to be the guinea pig and take the treatment first.

How to get past tradeoffs

Regulatory systems struggle with a tradeoff between minimizing risk and maximizing innovation.

I often hear people make both of these valid-sounding arguments:

Obviously we need safety guardrails! Look at X, Y, and Z examples where people died or were hurt by snake oil products.

Obviously regulation holds us back from innovation and causes unnecessary death and suffering! Look at how X, Y, and Z regulatory bodies (cough FDA cough) have all this superfluous red tape. (This is the common opinion of people in Próspera.)

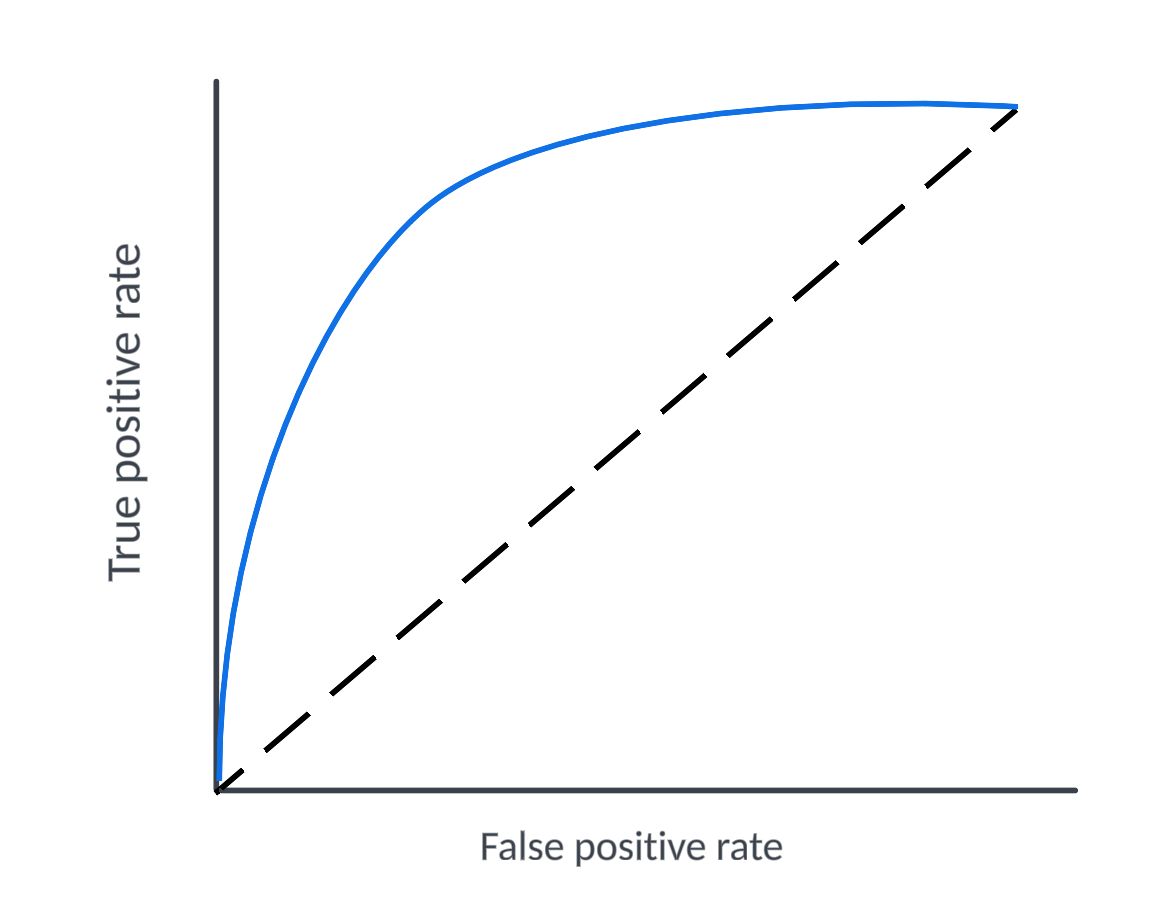

In machine learning, this tradeoff is analogous to the tradeoff between maximizing true positives and minimizing false positives. Lower the decision threshold of your binary classifier, and you catch more of the true positives, but you also catch more false positives in your net. A cancer screening that errs on the side of caution will more frequently indicate cancer in people who don’t have it.
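A small sketch of that threshold effect, using made-up screening scores and labels (hypothetical data, not from any real test). A case is flagged positive when its score is at or above the threshold, so lowering the threshold can only add flags, raising both rates.

```python
def rates_at_threshold(scores, labels, threshold):
    """Return (true positive rate, false positive rate) when flagging score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    positives = sum(labels)
    negatives = len(labels) - positives
    return tp / positives, fp / negatives


# Hypothetical scores (higher = more suspicious) and true labels (1 = has the condition)
scores = [0.95, 0.85, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]

for t in (0.8, 0.5, 0.25):
    tpr, fpr = rates_at_threshold(scores, labels, t)
    print(f"threshold={t}: TPR={tpr:.2f}, FPR={fpr:.2f}")
# threshold=0.8: TPR=0.50, FPR=0.00
# threshold=0.5: TPR=0.75, FPR=0.33
# threshold=0.25: TPR=1.00, FPR=0.50
```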

In both machine learning and clinical research, the tradeoff between maximizing true positives and minimizing false positives is visualized by an ROC curve.

Each point on the curve represents a different threshold, plotting the true positive rate against the false positive rate. A good model maximizes the area under the curve (AUC) by achieving a high true positive rate with a relatively low false positive rate across thresholds.
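Here is one way to trace that curve and measure its area, as a sketch on hypothetical data: sweep the threshold down through every distinct score, record (FPR, TPR) at each step, and integrate with the trapezoidal rule. It assumes the labels contain at least one positive and one negative; the function names are illustrative.

```python
def roc_curve(scores, labels):
    """Return ROC points as (FPR, TPR) pairs, lowering the threshold one distinct score at a time."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing is flagged
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical scores and labels (1 = has the condition)
scores = [0.95, 0.85, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]

print(f"AUC = {auc(roc_curve(scores, labels)):.3f}")  # AUC = 0.875
```

A model that ranked every positive above every negative would reach an AUC of 1.0, while random guessing averages about 0.5. Improving the model pushes the whole curve toward the top-left corner, rather than just moving the operating point along the same curve.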

Analogously, we can use this concept to visualize the challenge of regulation: how do we enable innovation while maintaining sufficient safety standards?

This is the problem that initiatives like Próspera should solve. It’s not just about moving the threshold toward more innovation (a1 to a2). It’s about shifting the entire curve and creating a better model that lessens the severity of the tradeoff (model A to model B).

But how do you actually achieve a better model? I don’t know, but I think it starts with a clearer picture of the problem. We need to look tradeoffs directly in the eye. We need to acknowledge that we are always accepting some level of risk, whether the first participants are FDA trial recruits or enthusiastic biohackers. We need to suggest system improvements along with our criticisms. We should support the ethos of taking action in the face of uncertainty.
